Overview

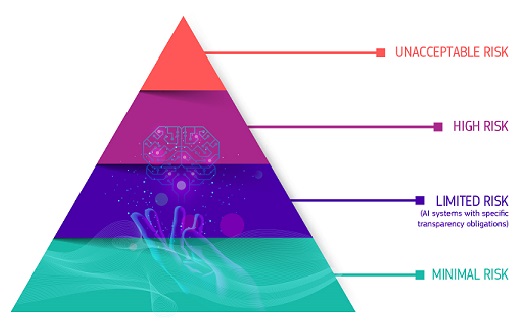

The AI Act, the first of its kind in Europe, aims to regulate artificial intelligence in a way that fosters trust, safety, and innovation. It introduces a risk-based framework that categorizes AI systems and imposes obligations depending on their potential impact on society.

In this article, we’ll explore what the AI Act is, what Phase 2 means for your business starting in August 2025, and how Eminence can help you turn regulatory compliance into a strategic advantage.

What Is the AI Act?

The AI Act, in force since 1 August 2024, is Europe’s and the world’s first comprehensive regulation on artificial intelligence. Its goal is to frame the development, marketing and use of artificial intelligence systems on the European market. It aims to ensure the development of ethical, human-centered artificial intelligence, while stimulating AI innovation and supporting the smooth functioning of the single market.

It adopts a risk-based model that sorts AI systems into four tiers (unacceptable, high, limited, and minimal risk), with separate rules for general-purpose AI (GPAI).

- Unacceptable risk: all AI systems considered a clear threat to the safety, livelihoods and rights of people are banned outright.

- High-risk: AI systems that can pose serious risks to health, safety or fundamental rights are subject to strict transparency, quality, risk assessment, and documentation requirements.

- Limited risk: AI systems that are not considered dangerous but still require a minimum level of transparency to protect users and build trust. Two obligations apply:

  - Disclosure obligations: if someone is interacting with an AI system, like a chatbot or a virtual assistant, they must be clearly informed that they are talking to a machine. This helps users make informed decisions and avoid being misled.

  - Generative AI content: if an AI creates content (text, image, video), the provider must make sure this content is recognizable as AI-generated.

- Minimal or no risk: these systems are not subject to specific rules under the AI Act.

https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai
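For teams that keep an inventory of their AI systems, the tiers above can be captured in a small lookup. The following is only a minimal sketch: the names `RiskTier`, `OBLIGATIONS`, `obligations_for`, and `is_allowed_on_market` are hypothetical, and the obligation labels are shorthand for the list above, not legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk tiers as summarized in this article (hypothetical names)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Shorthand labels paraphrasing the article's list; not legal wording.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned outright"],
    RiskTier.HIGH: ["transparency", "quality", "risk assessment", "documentation"],
    RiskTier.LIMITED: ["disclose AI interaction", "mark AI-generated content"],
    RiskTier.MINIMAL: [],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the obligation labels attached to a tier."""
    return list(OBLIGATIONS[tier])


def is_allowed_on_market(tier: RiskTier) -> bool:
    """Only the unacceptable-risk tier is prohibited entirely."""
    return tier is not RiskTier.UNACCEPTABLE
```

A lookup like this is only a starting point: deciding which tier a given system belongs to is a legal assessment, not something the code can do for you.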

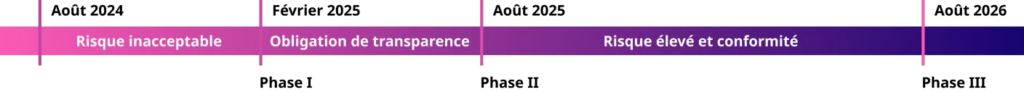

Key phases of the EU AI Act

The AI Act follows a phased deployment to give companies time to adapt, but with firm deadlines. In recent months, several major tech companies and even some EU member states called for a pause in the implementation of the legislation, citing a lack of clarity and the heavy compliance burden. However, the European Commission firmly rejected these requests.

In early July 2025, the Commission confirmed that the AI Act would be enforced exactly as planned, underlining the EU’s commitment to responsible and trustworthy AI development.
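As a rough illustration, the three phases described in this section can be encoded as a dated list and checked against any given day. This is a sketch under assumptions: `PHASES` and `phases_in_effect` are hypothetical names, and the day of the month for Phases 1 and 3 (the 2nd) is assumed here, since the article only gives the month for those two.

```python
from datetime import date

# (start date, label) pairs for the phases covered in this article.
# Assumption: Phases 1 and 3 start on the 2nd of the month, like Phase 2.
PHASES = [
    (date(2025, 2, 2), "Phase 1: ban on unacceptable-risk AI"),
    (date(2025, 8, 2), "Phase 2: obligations for GPAI providers"),
    (date(2026, 8, 2), "Phase 3: high-risk AI systems and full compliance"),
]


def phases_in_effect(today: date) -> list[str]:
    """Return the labels of all phases that have started by `today`."""
    return [label for start, label in PHASES if today >= start]


if __name__ == "__main__":
    for label in phases_in_effect(date.today()):
        print(label)
```

Keeping the dates in one place makes it easy to wire them into a compliance dashboard or a release checklist.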

Phase 1: February 2025 – Ban on unacceptable-risk AI

This phase introduces a prohibition on AI systems that pose an unacceptable risk to fundamental rights and safety. These include:

- Social scoring systems (e.g. ranking citizens based on behavior or traits),

- Real-time biometric surveillance in public spaces (except under strict law enforcement conditions),

- Manipulative or exploitative AI, such as systems that target vulnerable populations.

These applications are considered incompatible with EU values and are strictly forbidden.

Phase 2: 2 August 2025 – Transparency obligations for GPAI systems

This is a crucial turning point for providers of General-Purpose AI (GPAI) models, such as large language models and image generators.

From this date, providers must:

- Clearly label AI-generated content, especially deepfakes or synthetic media intended for public communication.

- Publish a summary of the content used to train the model, and put in place a policy to comply with EU copyright law.

- Perform regular bias and toxicity tests to mitigate harmful outputs.

- Implement robustness and cybersecurity safeguards.

This phase introduces concrete obligations for transparency and accountability, especially for foundational AI technologies with broad reach.

Phase 3: August 2026 – High-risk AI systems & full compliance

This is the final phase, applying to high-risk AI systems across sectors such as:

- HR and recruitment,

- Education,

- Healthcare,

- Law enforcement and border control,

- Finance.

Obligations include:

- Passing conformity assessments before market entry,

- Implementing risk management and human oversight mechanisms,

- Ensuring full traceability, logging, and incident reporting.

The most serious violations can draw fines of up to €35 million or 7% of global annual revenue, whichever is higher, highlighting the need for proactive compliance, especially for companies operating at scale.
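To get a feel for the exposure, the ceiling can be estimated with simple arithmetic. The sketch below reads the ceiling as the higher of the two figures; `max_fine_eur` is a hypothetical helper, amounts are whole euros, and the result is an upper bound for illustration, not a prediction of an actual penalty.

```python
FIXED_CAP_EUR = 35_000_000  # fixed ceiling quoted in this article
REVENUE_PERCENT = 7         # percentage of global annual revenue


def max_fine_eur(global_annual_revenue_eur: int) -> int:
    """Upper bound of the fine: the higher of the fixed cap and 7% of revenue.

    Integer arithmetic is used to avoid floating-point rounding on large sums.
    """
    revenue_based = global_annual_revenue_eur * REVENUE_PERCENT // 100
    return max(FIXED_CAP_EUR, revenue_based)


if __name__ == "__main__":
    # A company with EUR 1 billion in global revenue: 7% exceeds the fixed cap.
    print(max_fine_eur(1_000_000_000))  # 70000000
    # A company with EUR 100 million in revenue: the fixed cap applies.
    print(max_fine_eur(100_000_000))    # 35000000
```

Under this reading, the percentage becomes the binding figure once global revenue passes €500 million.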

🚨 Why it matters

Businesses must act now to align with the AI Act’s phases. The next 12 months are crucial for putting the right governance, documentation, and technical frameworks in place, not just to comply, but to build a foundation of trust, resilience, and ethical innovation.

The EU AI Act is not just a legal framework: it is a business transformation accelerator. It will reshape how companies build, deploy, and manage AI, requiring major adjustments across legal, technical, and operational teams.

Companies, especially those working with General-Purpose AI (GPAI) or high-risk AI applications, are facing an unprecedented compliance challenge. The expected Code of Practice, which will help clarify how to comply with GPAI obligations, is still under development and may only be available by late 2025. This forces companies to act in uncertainty, balancing innovation with legal risk.

-> Legal and product teams must collaborate now to anticipate documentation, transparency, and governance requirements before they’re formally detailed.

Turn compliance into opportunity

At Eminence, we don’t just help you comply with the EU AI Act: we help you transform regulation into a strategic advantage. While many businesses are still waiting for clarity or pushing for delays, we empower you to move early, build trust, and lead confidently in a regulated AI landscape.

Here’s how:

1. AI Readiness Audit & Risk Mapping

We start with a tailored audit of your current and planned AI systems to:

- Identify what qualifies as GPAI, high-risk, or limited-risk under the AI Act.

- Review and assess data practices, model outputs, and transparency obligations.

- Deliver a clear risk classification map and timeline based on your sector and use cases.

2. Compliance Documentation & Governance Framework

We help you build all required internal documentation, without slowing down your operations:

- Training data summaries and copyright reports for GPAI.

- Bias, toxicity, and robustness testing protocols.

- Human oversight procedures, escalation flows, and incident tracking models.

- Alignment with upcoming Code of Practice recommendations.

3. AI Conformity Process Implementation

For high-risk systems and GPAI providers, we support the full lifecycle of AI governance:

- Technical support for conformity assessments.

- Workflow integration: risk assessment steps embedded into your dev or product lifecycle.

- Collaboration with your legal and IT teams to implement EU-aligned design, monitoring, and testing practices.

4. Business Strategy & Market Positioning

Regulatory readiness is not just a cost: it’s a competitive advantage. We help you:

- Use transparency, fairness, and explainability as trust signals in your marketing and sales.

- Equip your teams to respond to RFPs or procurement checks that now require proof of AI compliance.

- Align your AI and data strategy with European values, making you a trusted player in public and private tenders.

5. Ongoing Monitoring & Future-Proofing

Regulation evolves, and so do AI models. Eminence ensures you're ready not just for 2025, but for what comes next:

- Regular updates and workshops on regulatory changes (e.g. consent strategy, Code of Practice, sector-specific rules).

- Continuous monitoring tools to track the behavior and performance of AI models.

- Strategic foresight to align your AI innovation roadmap with upcoming legal and ethical expectations.

Conclusion

Phase 2 of the EU AI Act kicks off in August 2025 with mandatory obligations for GPAI systems, followed by additional high-risk AI rules in August 2026. Companies face no delays or grace periods, and must act now. Turning compliance into a strategic asset involves proactive governance, transparency, and trust-driven differentiation.

Whether you're deploying customer-facing AI tools, working on internal automation, or managing GPAI applications, Eminence gives you the strategy, structure, and support you need to comply with the AI Act without compromising innovation.